Joris van Eijnatten is professor of cultural history at Utrecht University, The Netherlands. He has a fascination with numbers that few historians share. Last year he was the research fellow for digital humanities at the National Library of The Netherlands, where he applied text and data mining to study the image people have of Europe based on newspapers. I interviewed him about text and data mining in the humanities, his work and his personal romance with numbers.

It seems you have a great interest in numbers. How did this start?

Maybe it is because of my science background: I started out by studying geology and switched to history later on. I’m just interested in numbers, I don’t know why. During a postdoc I already played around with corpus linguistics software, at a time when hardly anyone else did. It calculates the probability of one word being in the proximity of another. At that time this was still done manually. So at an early stage in my career, I already got interested in numbers, in turning things into frequencies.

Could I say that you have a scientist’s approach to the humanities?

I think so. I have an interest in numbers; not many historians do. Traditionally, there are different kinds of historians. In cultural history it’s usually the ‘soft’ kind. In socio-economic history people are already more into numbers. They look for historical patterns and use large databases.

Do you think the digital component of humanities will grow further?

We now have a group of 25-30 cultural historians, with only 4 or 5 digital historians. The rest do history in the traditional way. But you can see that several things are happening right now. Traditional history will not disappear. You always need people to read texts and understand them. I think that will always be necessary. But I do think more people will get into digital humanities. A good side effect is that all historians are forced to think about their methodology more. That’s a good development.

Do you also see this change in education?

Historians tend to be conservative. Students want to study history because they want to go into the archive; it’s romantic. Many of them are frightened by a graph and they know very little about statistics. Now I am trying to set up a data course for first-year students. I think all of them should be confronted with digital humanities during their studies. Right now they are not very digitally literate. If they see a graph, for instance, they may not be very critical about it. And that’s dangerous. They should read and analyze critically. They should find out what’s going on underneath that graph.

Do you think there also could be a romantic aspect to numbers?

I have a romance with numbers, haha. But no, it’s not the same. What many people don’t realize is that the archive is already a selection. First of all not everything gets in, then whole archives get lost because of a fire or a war. But what does that archive tell you?

That is different from trying to understand what a set of numbers means, as part of a larger series.

Now let’s talk about your work at the National Library. You studied the image people have of Europe based on newspapers in the archive. Can you tell a bit more about this?

What interests me is the democratic deficit. Basically you can divide society into the people and the elite. The elite thinks Europe is good and it’s something you should want. The other people think it’s no big deal, Brussels is far away and we should focus on our own country. So what I wanted to know is: what could people have known about Europe in the past?

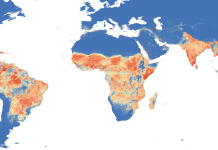

I used a communication theory borrowed from propaganda theory. It says that if you repeat a message over and over again, it gets lodged in people’s minds. Now think about newspapers. If people read something in there every day, it becomes part of their mindset. I looked at weather reports, which give geographical descriptions of Europe. And I wanted to know if that influences people’s mindset. So I looked at which places, which towns, they mention. And then you see in Dutch newspapers that it’s mainly western Europe. Those are the regions that are mentioned the most. Maybe that’s why people feel eastern Europe is so far away.

I also looked at British weather maps, and they’re mainly focused on London, a bit of Scotland… and that’s it. It’s very self-focused. So there you have Brexit.

I also looked at narratives, sports, other common things. What kind of frame do people get? That’s what interests me.
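The place-name counting described above can be illustrated with a minimal Python sketch. It is not the code used in the research: the gazetteer, its "west"/"east" labels and the sample reports are all invented for illustration, and a real study would need a much larger place list and cleaned newspaper text.

```python
import re
from collections import Counter

# Hypothetical gazetteer: place name -> region label (illustrative only).
GAZETTEER = {
    "Paris": "west",
    "London": "west",
    "Brussels": "west",
    "Warsaw": "east",
    "Budapest": "east",
}


def count_places(reports, gazetteer=GAZETTEER):
    """Count how often each gazetteer place is mentioned across all reports."""
    counts = Counter()
    for text in reports:
        for place in gazetteer:
            # Whole-word match, so "Paris" does not match "Parisian".
            pattern = rf"\b{re.escape(place)}\b"
            counts[place] += len(re.findall(pattern, text))
    return counts


def count_regions(place_counts, gazetteer=GAZETTEER):
    """Aggregate the per-place counts into per-region totals."""
    region_counts = Counter()
    for place, n in place_counts.items():
        region_counts[gazetteer[place]] += n
    return region_counts


# Invented sample texts standing in for digitized weather reports.
reports = [
    "Rain in London and Paris, dry in Brussels.",
    "Fog over London; snow expected in Warsaw.",
]

places = count_places(reports)
regions = count_regions(places)
print(places.most_common())
print(regions.most_common())
```

Comparing the regional totals over many years of reports is one simple way to see which parts of Europe readers were shown most often.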

One of the problems with text and data mining is access to content. Do you feel that content was missing in your case?

Well, I looked for instance at the Times, and that was a commercial dataset. But I think there is still too little data available. Look at the National Library of The Netherlands. They only have 15% of the newspapers digitally available. And in general, the available content is heavy on American and English material. With German material you often have the problem that it’s heavily restricted by copyright. And now we’re only talking about newspapers; think about books, administrative records, handwritten material… There are still hundreds of kilometers of archive that haven’t been digitized yet. Luckily historians are used to the data not being there, so we can still work with it.

What tools did you use for your research? Could you use existing tools off the shelf?

During the research fellowship I learned to code myself, so I can understand what a developer is doing. And I started doing simple things myself. Digital humanities requires that you know what coding is. Otherwise there are a lot of things you cannot do. So I learned Python, for example, which was very interesting and fun. It helps me to be able to adapt existing software and to write code to do simple things. What I do is radical simplicity: I keep it simple, but in a way that can give new results.

My colleague at the National Library also developed a tool that now everyone can use. And then there are some tools I came across, like stuff from GitHub. Open access software.

And then there is SPSS. What many people don’t know is that, next to the statistics module, it now also has a modeler that allows you to do text analysis, sentiment analysis for example. I found it not very useful as a historian, but it was useful for cleaning data.

One very complicated tool I used for the project Translantis is called Texcavator. It allows you to make word clouds and timelines and to construct databases.

Now we also have a model in ShiCo, which does very complicated things, like word embeddings.

A lot of text mining has to do with the distribution of words: are words in each other’s proximity? If relationships between words occur in different texts, it means something. Say you have 10,000 texts, which is big data in the humanities. Is there a pattern of words occurring in each other’s proximity? ShiCo uses the principle of distributions in different ways, in three-dimensional space actually. It requires very advanced programming skills that I don’t have. But at least now I do understand how it works.
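The basic question of whether words occur in each other’s proximity can be shown in a few lines of Python. This is only a rough sketch of window-based co-occurrence counting, not of how ShiCo or any other tool mentioned here works; the function names, the window size and the two sample sentences are assumptions made for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cooccurrences(texts, window=3):
    """Count unordered word pairs that appear within `window` tokens of each other."""
    pair_counts = Counter()
    for text in texts:
        tokens = tokenize(text)
        for i, word in enumerate(tokens):
            # Look only at the next `window` tokens, so each pair of positions
            # is counted exactly once.
            for other in tokens[i + 1 : i + 1 + window]:
                if other != word:
                    pair_counts[tuple(sorted((word, other)))] += 1
    return pair_counts


# Invented sample texts; a real corpus would hold thousands of articles.
texts = [
    "Europe needs peace and peace needs Europe",
    "Trade in Europe brings peace",
]

pairs = cooccurrences(texts, window=3)
print(pairs.most_common(5))
```

Pairs that keep turning up close together across many texts are the kind of pattern the answer above refers to. Word embeddings build on the same distributional principle, but represent each word as a vector rather than as raw counts.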

Why was it necessary to modify tools and develop new ones?

A lot of code has already been written, and a lot of money went into this. But lots of it cannot be used in a field like history. There is no one-size-fits-all solution. I think in general there are three categories. 1. There is a lot of software that isn’t useful, for instance because it’s too experimental. 2. There are very useful bits and pieces on GitHub that just need a bit of adjusting. 3. Sometimes software is too complicated, and I need a developer. But I do want to be able to understand how it works myself, at least the basics.

By Martine Oudenhoven, LIBER